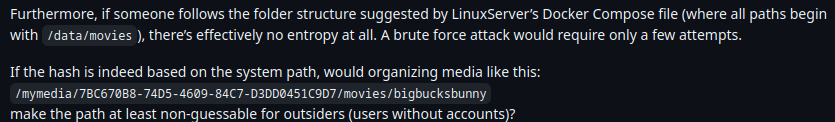

I don’t need to trust because I know how it works: https://github.com/jellyfin/jellyfin/blob/767ee2b5c41ddcceba869981b34d3f59d684bc00/Emby.Server.Implementations/Library/LibraryManager.cs#L538

Yes… exactly how I said it works. Notice the return.

`return key.GetMD5();`

It’s a hash, not a proper randomized GUID. But thanks for backing me up I guess? I wasn’t interested in posting the actual code for it because I assumed it wouldn’t be worth a damn to most people who would read this. But here we are.
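To make the hash-versus-random distinction concrete, here is a minimal sketch. It does not reproduce Jellyfin's actual key construction or string encoding; `derived_id` and the sample path are hypothetical, and the UTF-8 encoding is an assumption made purely for illustration.

```python
import hashlib
import uuid


def derived_id(key: str) -> uuid.UUID:
    """Build a GUID-shaped value from the MD5 digest of a key string.

    Hypothetical helper: the encoding (UTF-8) and the key format are
    assumptions for illustration, not Jellyfin's real implementation.
    """
    digest = hashlib.md5(key.encode("utf-8")).digest()  # always 16 bytes
    return uuid.UUID(bytes=digest)


def random_id() -> uuid.UUID:
    """A properly randomized GUID: unrelated to any input."""
    return uuid.uuid4()


if __name__ == "__main__":
    path = "/media/movies/Example (2020)/Example (2020).mkv"  # made-up path
    # Same input, same ID, on every machine that uses the same scheme.
    print(derived_id(path) == derived_id(path))  # True
    # Random GUIDs cannot be recomputed from the path.
    print(random_id() == random_id())  # False
```

The point is only that a hash-derived ID can be recomputed by anyone who knows (or guesses) the input, while a random one cannot.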

They can’t. Without the domain, the reverse proxy will return the default page.

You are wrong, but at this point I’d have to educate you on a lot of stuff that I don’t have the time or care to educate you on. The tools are out there, and it’s beside the point anyway: proper auth fixes all the concerns. If it’s publicly accessible you have to assume that someone will target you. It’s pitifully simple for someone to set up a tool to scan ranges and find stuff (especially with SSL registrations being public in general; if I asked any database for all domains issued that start with “jf” or “jellyfin” or other common terms, I’d likely find thousands instantly). Shodan can and does also do domain stuff.

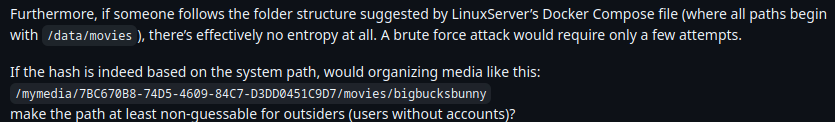

There are 2 popular Docker images, both store the media in different paths by default

So they’d only have to have 2 hashes for a file to hit the VAST MAJORITY OF PEOPLE WHO USE THE DOCKER. What an overwhelming hurdle to jump…

You do not have to follow the default path

Correct, but how many people actually deviate? Forget that most people will map INTO the container and thus conform to the mapping that the containers want to use. This standardizes what would have been a more unique path INTO a known path. This actually makes the problem so much worse.

The server does not even have to run in Docker

And? Many people are simply going to mount as /mnt/movies or other common paths. Pre-compiling md5 hashes with hundreds of thousands of likely paths that can be tested within an hour is literally nothing.

You do not know the naming scheme for the content

Sure, but most people follow defaults in their *arr suite… Once again… the up-front “cost” of precompiling a rainbow table is literally nothing.

It does not need to be similar, it needs to be identical.

Correct but the point that I made is that they would simply pre-build a rainbow table. The point would be that they would take similar paths and pre-md5 hash them. Those paths would be similar. Not the literal specific MD5 hash.
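Both halves of that exchange can be shown in a few lines. This is a sketch under stated assumptions: the paths are made up, `md5_hex` and `lookup` are hypothetical helpers, and the hashing is plain MD5 over UTF-8 rather than Jellyfin's actual scheme. A near-miss path never matches, but any path that is in the pre-built table matches immediately.

```python
import hashlib


def md5_hex(text: str) -> str:
    """Hex MD5 of a string (UTF-8 is an assumption for illustration)."""
    return hashlib.md5(text.encode("utf-8")).hexdigest()


# Hypothetical list of commonly used layouts, hashed once ahead of time.
candidate_paths = [
    "/media/movies/Example (2020)/Example (2020).mkv",
    "/mnt/movies/Example (2020)/Example (2020).mkv",
]
precomputed = {md5_hex(p): p for p in candidate_paths}


def lookup(actual_path: str):
    """Return the matching candidate, or None if there is no exact match."""
    return precomputed.get(md5_hex(actual_path))


if __name__ == "__main__":
    # A common layout is found with a single dictionary lookup.
    print(lookup("/mnt/movies/Example (2020)/Example (2020).mkv"))
    # A merely similar path (different folder case) produces no match.
    print(lookup("/mnt/Movies/Example (2020)/Example (2020).mkv"))
```

So "identical, not similar" is true of each individual hash, and the table only works to the extent that real setups land on one of the listed paths.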

There are 1000s of variations you have to check for every single file name

Which is pitifully easy if you precompile a rainbow table of hashes for the files in the name formats and file structures that are relatively common on Plex/Jellyfin setups… especially to mirror common naming formats and structures that are used in the *arr setups. You can likely check 1000 URLs in a matter of a couple of seconds… Why wouldn’t they do this? (The only valid answer is that they haven’t started doing it… but could at any time.)

My threat model does not include “angsty company worried about copyright infringement on private Jellyfin servers”.

Yes… let’s ignore the companies that have BOATLOADS of money and have done shit like actively attack torrents and trackers to find thousands of offenders and tie them up legally for decades. Yes, let’s ignore that risk altogether! What a sane response! This only makes sense if you live somewhere that doesn’t have any reach from those companies… Even then, if you’re recommending Jellyfin to other people without knowing that they’re in the same situation as you, you’re not helping.

Why bother scanning the entire internet for public Jellyfin instances when you can just subpoena Plex into telling you who has illegal content stored?

I thought you knew your threat model? Plex doesn’t hold a list of content on your servers. The most Plex can return is whatever metadata you request… Except that risk now is null because Plex returns that metadata for any show on their streaming platform or for searches on items that are on other platforms, since that function to “show what’s hot on my streaming platforms” (stupid fucking feature… aside) exists. So that metadata means nothing, as it’s used for a bunch of reasons that would be completely legitimate. The risk becomes that they could add code that does record a list of content in the future… Which is SUBSTANTIALLY LESS OF A RISK THAN COMPLETE READ ACCESS TO FILES WITHOUT AUTH but only if you guess the magic incantations that are likely the same as thousands of others’ magic incantations! Like I said several times though, I’d LOVE to drop Plex, BECAUSE that risk exists from them. But Jellyfin is simply worse.

You seem wildly uneducated on matters of security. I guess I know now why so many people just install Jellyfin and ignore the actual risks. The funny part is that rather than advocating for fixing it, so that it’s not a problem at all… you’re waving it all away like it could never be a problem for anyone anywhere at any time. That’s fucking wildly asinine when a proof of concept of the attack was published on a thread 4 years ago, and is still active today. It’s a very REAL risk. Don’t expose your instance publicly. Proxied or not. You’re asking for problems.

Thanks for admitting it. A few people simultaneously responded attacking my warning. So rereading my response to you, I recognize I was a bit more snarky than was warranted, and I apologize for that.

But yeah, 2FA (even simple TOTP) baked in would go a long way on the user front too.
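For anyone wondering how "simple" TOTP is, here is a minimal sketch of the RFC 6238 algorithm using only the Python standard library. It is not anything Jellyfin ships; `totp` and `verify` are hypothetical names, and the 30-second step and one-step drift window are just common defaults assumed here.

```python
import hashlib
import hmac
import struct
import time


def totp(secret: bytes, at=None, step: int = 30, digits: int = 6) -> str:
    """Compute a time-based one-time password (RFC 6238, HMAC-SHA1)."""
    if at is None:
        at = time.time()
    counter = int(at // step)  # number of time steps since the Unix epoch
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation, as in RFC 4226
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def verify(secret: bytes, submitted: str, at=None, step: int = 30,
           window: int = 1) -> bool:
    """Accept codes from the current step and `window` steps either side."""
    if at is None:
        at = time.time()
    for drift in range(-window, window + 1):
        expected = totp(secret, at + drift * step, step, len(submitted))
        if hmac.compare_digest(expected, submitted):
            return True
    return False


if __name__ == "__main__":
    shared = b"12345678901234567890"  # test secret from the RFC, not for real use
    code = totp(shared)
    print(code, verify(shared, code))
```

A real deployment would also need per-user secret storage, enrollment, and rate limiting on failed attempts, which this sketch leaves out.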

It’s clear that Sony could just generate a rainbow table of MD5 hashes with common naming conventions and folder conventions, make a list of 100k paths to check or what have you for their top 1000 movies… then use Shodan (or a similar tool) to find JF instances, and then check the full table in a few hours… rinse and repeat on the next server. While that alone shouldn’t be enough to prove anything, the onus at that point becomes your problem, as you now have to prove that you have a valid license for all the content that they matched. They’ve already got the evidence that you have the actual content on your server, and you having your instance public and linkable could be (I’m not a lawyer) sufficient to claim you’re distributing. Like, I can script this attack myself in a few hours (would need a few days to generate a full rainbow table)… Put this in front of a legal team of one of the big companies? They’ll champ at the bit to make it happen, just like they did for torrents… especially when there’s no defense of printers being on the torrent network, since it’s directly on your server that exists on your IP/domain.