Don’t be worse than Russia. Please fix.


build your own print server that gives you all the bells and whistles like AirPrint.

…why? CUPS is a print server. You don’t need anything else.

Compression is mostly done in software.

21·1 year ago

They can still share code. Just not code maintained by dma.

71·1 year ago

and with rossman too.

I decided to read the replies: weird, they suggest the accusation is overblown.

I decided to read the context: WTF is this?! Unholy shit, dear Faust, what did I read? What a deflection!

I thought I was terminally online with mental disorders, but this makes me look like the most grass-touching and sanest person.

7·1 year ago

MIT X11-style license

BSD on Rust. It will meet the same fate long term unless they move to GPL or something more copyleft.

142·1 year ago

https://lore.kernel.org/lkml/293df3d54bad446e8fd527f204c6dc301354e340.camel@mailbox.org/

General idea seems to be “keep your glue outside of core subsystems”, not “do not create cross-language glue, I will do everything in my power to oppose this”.

7·1 year ago

It will take more effort than writing a kernel from scratch. Which they are doing anyway.

101·1 year ago

Only one compiler, nailed to LLVM. And other reasons already mentioned.

4·1 year ago

“Follow the rules to the letter” kind of sabotage manual.

31·1 year ago

Lol. They haven’t even published weights since GPT-2. And weights are not enough for open source.

Many lossless codecs are lossy codecs + residual encoders. For example, FLAC has a predictor (the lossy codec part) + a residual.
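
To make the predictor + residual idea concrete, here is a minimal sketch. It assumes a fixed second-order predictor (each sample predicted as `2*x[n-1] - x[n-2]`), similar in spirit to FLAC's fixed predictors; the function names and sample values are made up for illustration, and a real codec would also entropy-code the residuals rather than store them raw.

```python
def encode(samples):
    """Split samples into warm-up values plus prediction residuals."""
    warmup = list(samples[:2])  # stored verbatim; the predictor needs two samples
    residuals = []
    for n in range(2, len(samples)):
        # "Lossy" part: a linear extrapolation from the two previous samples.
        prediction = 2 * samples[n - 1] - samples[n - 2]
        # Residual: the exact error of the prediction, kept so nothing is lost.
        residuals.append(samples[n] - prediction)
    return warmup, residuals


def decode(warmup, residuals):
    """Rebuild the original samples from warm-up values and residuals."""
    out = list(warmup)
    for r in residuals:
        prediction = 2 * out[-1] - out[-2]
        out.append(prediction + r)
    return out


samples = [100, 104, 109, 113, 118, 121, 125]  # hypothetical audio samples
warmup, residuals = encode(samples)
restored = decode(warmup, residuals)
```

The residuals are much smaller in magnitude than the samples themselves, which is what makes them cheap to encode, and adding them back to the prediction restores the input exactly.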

If you “talk to” a LLM on your GPU, it is not making any calls to the internet,

No, I’m talking about https://en.m.wikipedia.org/wiki/External_memory_algorithm

Unrelated to RAGs

Just buy a used PC. Same perf, lower price.

You can always use system memory too. Not exactly a UMA, but close enough.

Or just use an iGPU.

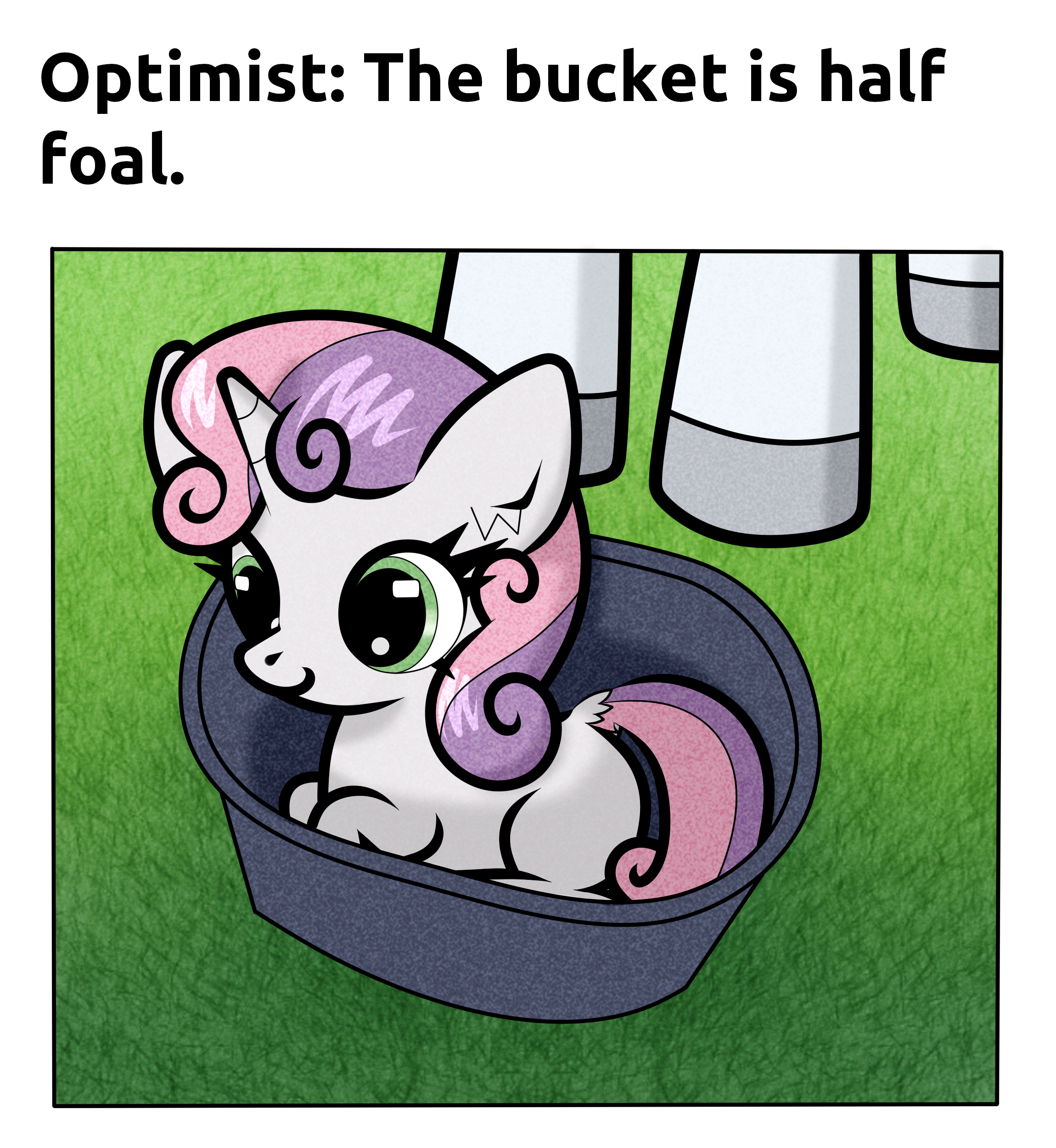

Such insensitivity!